5 Reasons I’m Changing How I Learn AI (and What My New Approach Looks Like)

I must admit that, with the recent wave of Artificial Intelligence (AI), I have felt overwhelmed. Overwhelmed by the fantastic things AI can do, from suggesting my next meal to predicting how proteins fold (which I’m still trying to wrap my head around). Overwhelmed by the vast number of AI experts out there who have secretly been studying Machine Learning while I spent the last few years on Systems Integration and at the Kubernetes coalface. Overwhelmed by the mountain of information I would have to wade through to get enough of a grasp of AI to avoid almost certain redundancy by 2030.

The words of the Chinese philosopher Lao Tzu, "a journey of a thousand miles starts with a single step" (not to be confused with "keep walking", from the famous Scotsman Johnnie Walker), are ringing in my head, amid the resounding barks of an old dog not at all intent on learning new tricks. And so I have set out on my journey to learn about AI.

For the reasons set out below, I have changed my approach (quite drastically). If you hear similar voices and sounds in your head and have been keen to learn more about AI, I hope you find the following helpful and join me on my journey to better understand this new phenomenon.

Reason #1: Boxes and lines are just boxes and lines

In his book, The Software Architect Elevator, Gregor Hohpe describes architects who are able to engage at different levels of the organisation, including the development level, also known as the “engine room”. Over my career, I have taken great pride in being able to whiteboard a solution architecture and then prototype and build it. However, 18 hours into a recent hackathon, I reluctantly had to admit defeat: I had fallen victim to the Dunning-Kruger effect.

For reference, the Dunning-Kruger effect is the tendency of people with low ability in a specific area to overestimate that ability. Put simply, you think you know more than you actually do. Interestingly, it has a flip side: experts in a domain tend to assume that everyone else knows as much as they do. For me, it was unfortunately a case of the former and not the latter. It was not as simple as I thought it would be!

Drawing up a solution architecture after reading a white paper or watching a re:Invent session at 1.5x speed is one thing. The wave of sheer panic that sets in when you can’t connect to the critical graph database you have just provisioned in the cloud is quite another, and it is enough to humble and tame the biggest of egos. My lack of preparation for the event taught me a valuable lesson: I should be spending far more time in the engine room.

The humble line between two points abstracts a world of complexity. A world that must be understood.

Reason #2: The Copy-Paste Syndrome

One of the biggest dangers I anticipate in the use of Generative AI (GenAI) is that most users simply copy and paste the response. For example, a student who needs an essay or book review might put some effort into the prompt. Then, as the generated output appears, a mix of relief and elation leads to a quick, cursory, almost nonchalant skim. This is potentially why hallucinations (plausible-sounding but incorrect responses) are so often missed.

In my first-hand experience, even professionals in the workplace copy references almost verbatim from a Large Language Model (LLM). If you’ve ever asked an LLM for a code sample and gone from the dizzy heights of seeing the code you desperately need appear magically before you to the depths of betrayal when the compiler reports an ‘unknown’ function, you will know what I mean.

If you copy-paste, what value are you adding?

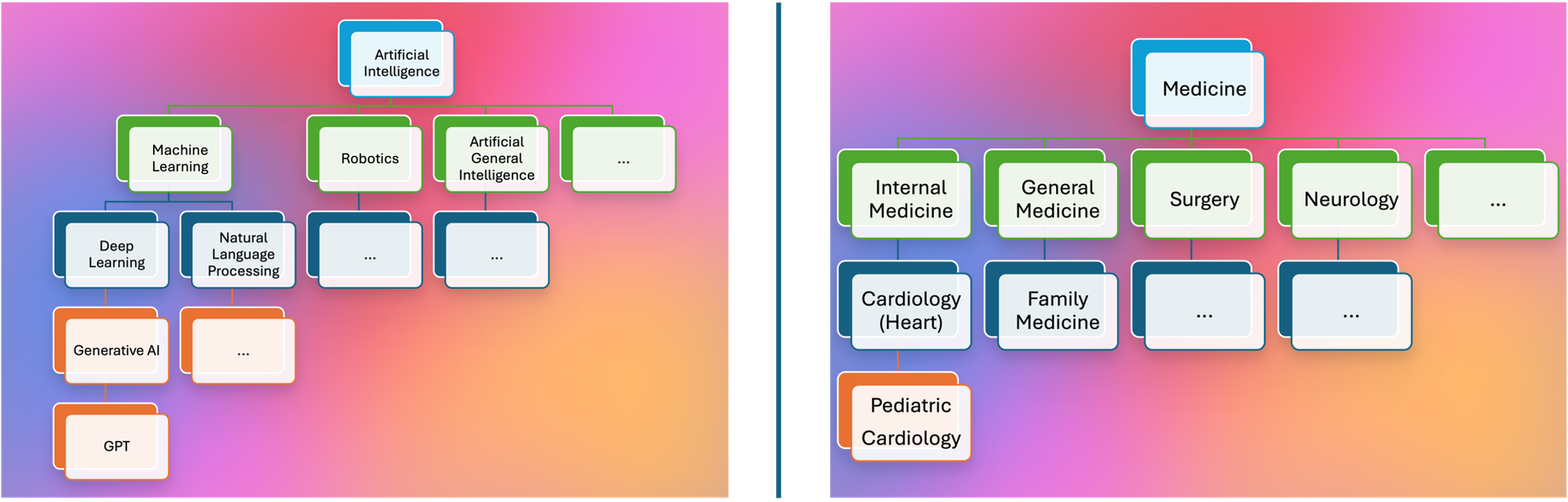

Reason #3: AI is more than ChatGPT

What surprised me as I prepared for an AI Practitioner certification is that ChatGPT, an application of Generative AI, represents just one of many areas in the field of AI. As in medicine, there are so many different branches and specialities that it is mind-bending. Here's a quick primer:

- Artificial Intelligence (AI) is a wide field focused on creating smart systems that can do tasks usually requiring human intelligence, like seeing, thinking, learning, solving problems, and making decisions. It covers different methods and approaches, such as machine learning, deep learning, and generative AI.

- Machine Learning (ML) is a subset of AI focused on methods that enable machines to learn. Rather than being explicitly programmed for every case, these methods use data to improve a computer's performance on a set of tasks.

- Deep learning is a type of machine learning that uses large networks of artificial "neurons" to learn patterns from vast amounts of data. These networks are loosely inspired by the way the human brain works, allowing deep learning systems to recognise images, understand speech, and even make decisions by learning from examples.

- Generative AI (GenAI) is a subset of deep learning (and therefore of ML). It is a general-purpose technology used to generate new, original content, such as text, images or code, rather than finding or classifying existing content.

- Generative Pre-trained Transformer (GPT) is a type of large language model that can understand and respond to text, like having a conversation with a human. It’s trained on large amounts of text, much of it from the internet, and uses what it has learnt to answer questions, explain things, or help with tasks like writing and problem-solving. It works by repeatedly predicting what comes next (strictly, the next "token", a word or piece of a word) based on what you ask or say and what it has generated so far.
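To make "predicting what comes next" a little more concrete, here is a minimal Python sketch of next-word prediction. It is a deliberately toy bigram model that simply counts which word follows which in some sample text; the function names and sample sentence are hypothetical and purely illustrative. Real GPT models use transformer neural networks over tokens with billions of learned parameters, so this shows only the shape of the prediction loop, not how GPT is actually built.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the word most often seen after `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def generate(follows: dict[str, Counter], start: str, length: int = 5) -> str:
    """Repeatedly predict the next word and feed it back in as the new context."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break  # no known continuation, so stop generating
        out.append(nxt)
    return " ".join(out)


if __name__ == "__main__":
    # Hypothetical "training data" for the toy model.
    model = train_bigrams("learn ai by building learn ai by doing learn ai by building")
    print(generate(model, "learn", 3))  # prints "learn ai by building"
```

Because "by" is followed by "building" twice and "doing" only once, the model picks "building". An LLM does something analogous at vastly greater scale, scoring every possible next token using the whole preceding context rather than just the previous word.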

What absolutely blew me away is that even the AI researchers who create these models cannot fully explain what the learned connections between "neurons" represent, or why a model produces a particular answer. This is a big part of what scares people about AI.

An aspiration to "learn AI" is equivalent to an aspiration to "learn medicine". You can choose to be a General Practitioner (GP) or a Specialist. Last time I checked, it takes quite a bit of time to become a doctor...

Reason #4: Certification does not make me an expert

As you might have observed from your LinkedIn feed, there has been a significant increase in “AI-related” posts and self-styled “AI experts”. As I prepared for, wrote and obtained my AI Practitioner certification, I felt very much the same way as when I completed my first AWS certification and my undergrad Applied Maths major. Eager, book smart, relieved, but still very much overloaded with the ton of information I had just learnt.

In my opinion, a certification is simply a “ticket to the game”. It helps you understand the rules so you can appreciate the plays. Make no mistake - your seat is high up in the stadium, in the “nose-bleed” section. Before you even dare to venture an opinion, take the time to learn the game a bit better.

Simply understanding the theory (through certification or YouTube) and then venturing an opinion would be as ludicrous (and equally dangerous) as me reading through recipe books, binge-watching MasterChef and then giving my wife, who has decades of hands-on experience, advice about how to prepare the next family meal.

Beware of the Dunning-Kruger overconfidence effect that comes with a new certification!

(Thanks to Sumika Singh for the "Fastest Things on Earth" post that appeared in my feed.)

Reason #5: Application helps me understand

As I researched, I came across excellent (and entertaining) references such as the AlphaGo documentary (available on YouTube), which follows the DeepMind team that created an AI program to play the game of Go against a world champion. Although it is now more than seven years old, I highly recommend watching it. It is as chilling as it is impressive: the machine learnt how to play Go better than any human can. Since then, the team has moved on to create AlphaFold, which predicts how proteins fold, work so significant that members of the team were awarded the Nobel Prize in Chemistry a few weeks ago.

As valuable as it has been to research and attempt to understand ground-breaking AI papers such as Attention Is All You Need, to listen to the Audible version of Mustafa Suleyman’s thought-provoking book, The Coming Wave, and to watch Sir Demis Hassabis, Google DeepMind CEO, talk about AlphaFold, I’ve realised that this strategy is a carbon copy of me attempting to become a chef by studying the family copy of Indian Delights and watching my wife cook.

Which brings me to my approach...

My super-aspirational goal is to learn more about the various AI domains through application. I intend to follow a "hands-on" research approach:

- Define a problem statement. As much as I would love to, in the interests of time, it will not be as grandiose as AlphaGo or AlphaFold. I will do my best to make it relatable.

- Provide a hypothesis through a solution design (boxes and lines). Note that the design is iterative: it might start simple, then evolve to use different approaches or techniques. If you have any ideas or suggestions, please let me know.

- Implement it as a simple prototype. This is meant to demonstrate the concept, not to go into production. I will include detailed steps and instructions as evidence of the build.

- A key callout: the design and implementation will be based on Amazon Web Services (AWS) technology, as I am an AWS employee and have access to the services. I’m happy to get feedback on alternative approaches you might have used.

- Finally, briefly analyse how well the proposed solution solved the problem, considering factors such as cost, complexity, feasibility and scalability.

Here’s a clue about the first problem statement. Can you guess what it is?